Tutorial: Implementing JSON parsing (Rust)

Learn the fundamentals of parsing by implementing JSON parsing from scratch

Intended Audience:

- People who would like to learn about parsing

- Anyone who wants a hands-on gentle introduction to the Rust programming language

Recommended Proficiency Level:

- Comfortable with any statically typed language

- Familiar with a terminal / command line

- No prior Rust experience is needed

Learning Method:

This is a hands-on tutorial, just reading through won't get you much. You'll get the most out of it by actually firing up a code editor and following along!

The source code is available at: https://github.com/davimiku/json_parser

Introduction

In this tutorial, we will learn how to implement JSON parsing from scratch, using the Rust programming language.

We will create a library, so that users of our library can pass in the JSON string and they'll receive back parsed JSON.

This article is split into two parts:

- Introduction, Setup, and Tokenizing

- Parsing

The code for this article uses a procedural/imperative style, and should be familiar for anyone coming from languages using those styles. We won't get into advanced Rust features, and every new concept will be explained in detail.

To understand "JSON parsing", we'll first ensure we understand "JSON" and "parsing" in the next sections.

What is JSON?

JavaScript Object Notation (JSON) is a format for exchanging and storing data. As the name implies, the syntax is based on JavaScript, but it is a language-independent data format. The currently published Internet Standard is RFC 8259.

The possible data types in JSON are fixed (a closed set), meaning that the format itself is not extensible. There are exactly six possible data types that can be transmitted via JSON, also described in the previous link.

null- boolean:

trueorfalse - string:

"a string" - number:

2,-2,2.5,2E+32,2e-16 - array:

[ json_value, json_value, ... ] - object:

{ "key": json_value, ... }

The "array" and "object" types are recursive - they contain other JSON values.

What is Parsing?

In programming, "parsing" is transforming less-structured input into more-structured output by applying knowledge of the expected shape(s) of the data.

This transformation from less-structured to more-structured might fail if the input isn't compatible with the desired output.

This is a fairly flexible interpretation, and perhaps a little vague, so some breakdown on the statement above:

- less-structured input examples:

- A

Stringis a common input format for text-based data, which is often described as a sequence of characters (what is a "character"? An entire article could be written on this) - A stream of bytes, for example audio data that is parsed by some audio player program

- A

- more-structured output examples:

- JSON structure - rather than a flat sequence of "characters", it now has an organized representation with the six data variants discussed in the Introduction

- HTTP request - An incoming HTTP request is a stream of bytes, but it can be parsed into a more-structured representation that has the method, path, protocol, headers, body, etc.

- knowledge of the expected shape:

- We can't parse a less-structured input without knowing what it possibly could be

- We apply that knowledge when parsing, to check if the input adheres to the expected shape

- might fail:

- If the input doesn't align to the expectations, then the parsing fails

- Example: If the incoming String is not in JSON format, then it is not JSON and the parsing fails

- Example: If the incoming POST data to the

/api/invoiceendpoint does not have the fields defined for anInvoice, then it is not anInvoiceand parsing fails - Parsing failures are not exceptional. Any time we deal with data from outside of our program, the possibility of parsing failure is as equally plausible as parsing success

Project Setup

Enough talk, let's get started!

Development Environment Setup

The Rust compiler is available on all popular operating systems. If you don't already have it installed, head over to rust-lang.org and follow the instructions to get started for your operating system. This setup page also has links to activating Rust support in various code editors/IDE.

Personally, I use Visual Studio Code (VS Code) running in Windows Subsystem for Linux (WSL), but this tutorial can be followed on any operating system and code editor that Rust supports.

Project Setup

We're going to create a library for our JSON parsing. In your command line, navigate to the directory where you want this project to exist. For example: ~/tutorials/rust. Run the following command to generate a new Rust library project:

cargo new --lib json_parsing

This creates a new folder, json_parsing, that contains the new Rust project.

Why create a library?

From the documentation for cargo new, there are two options for creating a new project: --bin for binaries (applications) and --lib for libraries.

What we're designing and building here is the core part of the JSON parsing that could be used by multiple consumers. Example consumers could include a command-line application (CLI), a web application server, a desktop application, and more. This means that we need to carefully consider the public API of our library that is available to these potential consumers, and this is a very important part of libary design.

I do think there are an abundance of tutorials and resources out there for application design, but comparatively fewer resources for library design, possibly a topic for a future article.

Implementation

Open the newly created project folder in your favorite IDE / text editor. The file that we'll start with is named src/lib.rs.

src is the conventional folder name for "source code", and lib.rs is the default entry point for the library. There is already sample code in this file, including a function and a test. You can delete this code if you want, we won't need it.

First Thing: Define the Data

It can be intimidating to get started on a blank module. My advice for this scenario and pretty much any scenario in any language where you start from scratch is: define the data first.

In the Introduction, we said that a JSON value is one of six distinct variants:

- null

- boolean

- string

- number

- array

- object

Let's create a data type to model this! Rust uses an enum to represent a closed set of possible variants. From the Rust Programming Language book:

enums give you a way of saying a value is one of a possible set of values

(Keep the link to that book open, this is called "The Book" and is the official and the best overall resource for getting started with Rust!)

Go ahead and start adding our enum to the lib.rs file. We'll start with the "basic", non-recursive types.

/// Representation of a JSON value

enum Value {

/// literal characters `null`

Null,

/// literal characters `true` or `false`

Boolean(bool),

/// characters within double quotes "..."

String(String),

/// numbers stored as a 64-bit floating point

Number(f64),

}

New Concept: enum keyword

As mentioned above, the enum keyword defines a data structure where each instance must be

exactly one of the defined variants. "The Book" link is provided above and more information can be

found in the technical reference.

New Concept: Triple slash comments

Rust uses triple slash (///) for documentation comments. When you hover on the type name or

variant name (or any type/function/value), the corresponding comment will be shown to you.

Rust has additional features built-in to documentation comments such as Markdown formatting and even automatic testing! Read more in Documentation tests.

The enum keyword here defined the name of the type (Value) and the possible variants it can have. We named these variants as Null, Boolean, String, and Number.

Null: no associated dataBoolean: has the associated RustboolString: has the associated RustString- It's OK they can have the same name, one is the variant name we're giving it and the other is the associated data type

- If you'd like to use a different name for the variant, feel free as well

Number: has the associated Rustf64(64-bit floating point number)

Enum variants have an "OR" relationship, meaning that a Value is a Null OR Boolean OR String OR Number.

If you've used enum from some other languages such as C#, Java, etc. the concept of the payload/data with the variant may be new.

We can associate any data we want with the variant. This concept has been around for decades, apparently originating in the 1970s in Pascal or perhaps older, but has only recently been making its way into current "mainstream" languages. These are called "sum types" and are a necessary and fundamental part of accurately modeling data. To read more, check out Wikipedia or an article Sum Types are Coming.

Let's add the two remaining variants now, Array and Object.

enum Value {

// ...snip...

/// Zero to many JSON values

Array(Vec<Value>),

/// String keys with JSON values

Object(HashMap<String, Value>),

}

...snip... ?

Throughout the tutorial, I use comments with ...snip... that indicate that this particular code is building on a previous code snippet.

These two variants are interesting because they are recursive -- Array and Object contain other Value inside of them. Thankfully, this is pretty easy to represent in Rust, as seen above.

Array: the associated data isVec<Value>, pronounced as a "vector of Value(s)"- A Vec is a growable array type, analogous to

ArrayListin Java,Listin Python,vectorin C++,Arrayin JavaScript, etc. - It is "recursive", because the array can contain any other JSON value

- A Vec is a growable array type, analogous to

Object: the associated data isHashMap<String, Value>- A map between string keys and JSON values, which also makes this recursive

...and done! Users of our library will pass us some JSON string, we'll parse it and give them back this Value. Therefore, this Value data type is part of the public API of our library. Since it is part of our public API, let's make it public with the pub keyword.

pub enum Value {

// ...snip...

}

The Value enum in our public API is a closed set that users of our library cannot extend.

A note on the Open-Closed Principle

Data types created by the enum keyword are not extensible, thus perhaps violating the Open-Closed Principle, the "O" from the "SOLID principles".

Software entities (classes, modules, functions, etc.) should be open for extension, but closed for modification.

However, our software entity here (the Value enum) is purposely not open for extension. The possible variants of JSON values are a fixed set, which allows for exhaustive checking, and the guarantees provided by our library rely on this not being extensible. Deconstructing SOLID design principles has interesting remarks on this principle.

New Concept: pub keyword

The pub keyword indicates that the following type/function/module/constant is publicly

available. Read more about Visibility and

Privacy for the various

possibilities.

Knowledge Check

- What are the valid data types in JSON?

- What is an

enumin Rust? - Is there another way we could have expressed the boolean values in our

enum?

Answers

- There are exactly six valid data types in JSON: Null, String, Number, Boolean, Array, and Object.

- An

enumin Rust is a sum type, which models something that can be exactly one of a set of variants. - Instead of a Boolean variant with

booldata, we could have made two separate variants for True and False. What are the tradeoffs for this approach?

Setting up for Tokenizing

The next step is to implement the tokenizing (also known as "lexing"). Paraphrasing from that article:

In computer science, tokenizing is the process of converting a sequence of characters into a sequence of lexical tokens (strings with an identified meaning)

Since this is almost a self-referencing definition, let's use an example:

{

"nums": [1.2, 3.4]

}

This could be processed into the following lexical tokens:

LeftBrace

String("nums")

Colon

LeftBracket

Number(1.2)

Comma

Number(3.4)

RightBracket

RightBrace

Note that these tokens have a linear structure, i.e. we have a sequence of tokens.

Defining the Token type

Now we'll define the Token type, such as is seen in the previous section.

The Token is another closed set of variants, the grammar of JSON is fixed. Open up JSON.org again, what are the possible variants of the tokens?

Let's start with the "punctuation" kind of tokens. First, create a new file named tokenize.rs next to lib.rs.

Next, inside of lib.rs, declare the new module.

// in lib.rs

mod tokenize;

New Concept: mod keyword

The mod keyword declares a new module.

This may be unfamiliar depending on experience in other programming languages where the module tree is determined by the file system. In Rust, the module tree is explicitly declared by the programmer. Read the article Clear explanation of Rust’s module system for additional explanation.

Now that tokenize.rs has been declared a module, we can write code in it. We'll add the first few "punctuation" variants for Token.

// in tokenize.rs

pub enum Token {

/// `{`

LeftBrace,

/// `}`

RightBrace,

/// `[`

LeftBracket,

/// `]`

RightBracket,

/// `,`

Comma,

/// `:`

Colon,

}

Oh my brackets!

Here I've used "Brace" for the {} and "Bracket" for the []. This is a matter of preference, RFC 8259 defines these as "left/right square bracket" and "left/right curly bracket". Feel free to use that instead if you want 🙂

Next, let's define the literal tokens, which are null, true, and false.

pub enum Token {

// ...snip...

/// `null`

Null,

/// `false`

False,

/// `true`

True,

}

We have tokens that can contain variable data, which are numbers and strings.

pub enum Token {

// ...snip...

/// Any number literal

Number(f64),

/// Key of the key/value pair or string value

String(String),

}

As we saw earlier with the Value enum, it's fine to have a variant with the same name as the type of data it contains. This is a stylistic preference, if you prefer these to be different, that's fine too.

Knowledge Check

We used a single token to represent numbers, for both integers and floats by using Rust's f64, which is a 64-bit floating point representation.

What does the JSON specification say about integers? What are the tradeoffs of using a different token? Or having the Number variant itself be an enum with Integer and Float variants?

Define the public API

As stated earlier, tokenizing is taking a sequence of "characters" and turning that into a sequence of tokens. In Rust terminology, that is String -> Vec<Token>.

Let's start that top-level tokenize function now. Use the fn keyword to define a function and refer to the chapter in "The Book" on functions.

// in tokenize.rs, above the Token enum

pub fn tokenize(input: String) -> Vec<Token> {

Vec::new()

}

This is the top-level function that is public from this module (pub keyword).

New Concept: :: (double-colon) syntax

The :: is the "path" operator for accessing members of a namespace/scope. In this case, it is accessing a function called new that is associated to Vec. Rust uses :: for accessing members of a namespace/scope and . to access members of a value (we'll see this later).

Some other languages also use :: for a similar purpose. Ruby, for example, uses :: as the scope operator and . as the message operator (sending a message to an object). Some other languages use . for both accessing members of a namespace/scope and for accessing members of a value.

Writing Tests

Now that we have a function (well, just the start of one), we can write our first test!

Up to this point, we've been introducing only one new concept at a time. However, being able to test your code is very important because it'll give you quick feedback and the ability to actually run your functions without leaving your editor. Let's rip the band-aid off and show the necessary concepts for running a unit test.

// in tokenize.rs, below the Token enum

#[cfg(test)]

mod tests {

use super::{tokenize, Token};

#[test]

fn just_comma() {

let input = String::from(",");

let expected = [Token::Comma];

let actual = tokenize(input);

assert_eq!(actual, expected);

}

}

This will have a compiler error on the assert_eq! line. Hovering over the message, there are actually two compiler errors (paraphrased and simplified below).

- binary operation

==cannot be applied to typeVec<Token> Tokendoesn't implementDebug

The first error is because Token cannot be compared for equality with another Token, Rust doesn't generate this automatically. The second error is if the test fails, Rust will try to show you the expected result vs. actual result, but it can only do that if a Debug representation is available. To keep the scope of this tutorial smaller, I won't go into more detail but please feel free to read more in the Derivable Traits chapter of the Rust Book.

To resolve these compiler errors, add the following code:

// in tokenize.rs, directly on the Token enum

#[derive(Debug, PartialEq)]

pub enum Token {

// ...snip...

}

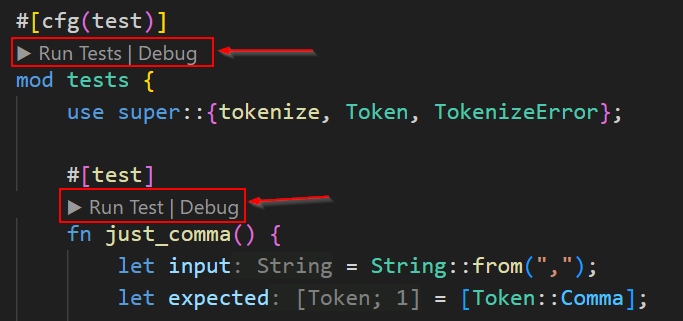

This provides Token with the ability to be compared for equality and to have a Debug representation for printing. If all is well, a "Run Test" button should appear next to the test function that you can click in VS Code. If you're not using VS Code or it's not working for some reason, you can run tests with the cargo test command.

Go ahead and run the test! It fails... which is expected because we haven't actually implemented anything. You should see something like this in your test output.

running 1 test

thread 'tokenize::tests::comma' panicked at 'assertion failed: `(left == right)`

left: `[]`,

right: `[Comma]`',

Note that the output message uses "left" and "right" to be as general as possible. Many people have settled on using the order of "actual, expected" which will be used in this article as well.

Whether you strictly adhere to Test Driven Development (TDD) principles or not, it's generally still a good idea to make sure a test fails before it succeeds - to avoid having tests that pass when they shouldn't due to a bug in the test itself.

Now, going back to explain the new concepts from the unit test.

New Concept: mod keyword

More of an extension of the existing module concept we are aware of, modules can be defined within a file. A file can contain multiple modules if desired.

As stated in the previous section on modules, the programmer explicitly defines the module tree, it is not implicit based on the filesystem. Also, the parent module defines its child module(s). In this case, the tokenize module defines a child module named tests.

New Concept: attributes

The #[something] syntax in the code example is an attribute. This is metadata that changes how the compiler uses the following entity (module, type, function, constant, etc.).

#[cfg(test)] means - "Only compile this code for tests". The module is available for tests but will not be included in a production build.

#[test] marks a function as being a unit test, the compiler will add some extra properties to it so that the test runner can find it and execute it.

New Concept: use and super keywords

The use keyword brings entities (module, type, function, constant, etc.) into the current scope.

The super keyword when used in a path refers to the parent module. In this case, the current module is tokenize::tests and the parent module of that is tokenize.

Go ahead and add other tests as you see fit. For example:

// inside of `mod tests`, among the other tests

#[test]

fn all_punctuation() {

let input = String::from("[{]},:");

let expected = [

Token::LeftBracket,

Token::LeftBrace,

Token::RightBracket,

Token::RightBrace,

Token::Comma,

Token::Colon,

];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

I generally follow the "AAA" test style, which is Arrange-Act-Assert.

- Arrange: set up the input data and expected result

- Act: run the function

- Assert: check the result

Implementing the Tokenizing

Time to actually implement the tokenizing!

Wait, what were we doing again? Oh that's right, we were here:

// tokenize.rs

pub fn tokenize(input: String) -> Vec<Token> {

Vec::new()

}

We're going to use a procedural approach:

- Initialize an index variable to

0 - While the index is less than the length of the string:

- Get the "character" at that index

- Process the "character" into a

Token - Add the

Tokento our output - Increment the index variable

- Return the output with all the

Tokens

What is a Character?

There's one problem though... Rust doesn't allow direct indexing into a String.

fn cant_index_into_string(string: String) {

let example = string[42]; // you can't do this

}

Try it yourself! You get a compiler error message that basically says this:

the type `String` cannot be indexed by `{integer}`

the trait `Index<{integer}>` is not implemented for `String`

We haven't learned what traits are yet, so just focus on the first line of the error message. A String cannot be indexed? Why not?

What should the following evaluate to? Translate this and try it in your favorite language, what do you get?

let example_1 = "🦀🦀🦀"

example_1[1]

let example_2 = "🏳️🌈"

example_2[1]

check in your browser console

You can check JavaScript's behavior in your browser console (F12 to open the console in most browsers).

In JavaScript, the '🦀🦀🦀' string is actually 12 bytes long! Each crab (U+1F980) is 4 bytes, bytes 0-1 are U+D83E and bytes 2-3 are U+DD80. These two together are known as a "surrogate pair", with a high surrogate and low surrogate, and together represent the crab emoji. This is how languages that use UTF-16 encoding represent values that can't fit in 16 bits.

The TextEncoder Web API can be used to check this to see how many bytes are in these strings.

console.log('🦀🦀🦀'.length) // 6

console.log(new TextEncoder().encode('🦀🦀🦀').length) // 12

console.log('🏳️🌈'.length) // 6

console.log(new TextEncoder().encode('🏳️🌈').length) // 14

The rainbow flag is actually not just one "character", it's three: 🏳️ (U+2690 white flag) + ZWJ (U+200D Zero Width Joiner) + 🌈 (U+1F308 rainbow). Fun!

All of this is to explain the context behind Rust's design decision - you cannot index into strings.

If you're interested in learning more about this topic, please check out The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets, How Unicode Works: What Every Developer Needs to Know About Strings and 🦄, and JavaScript has a Unicode problem. If you can explain why UTF-16 has the worst trade-offs of the different encoding schemes, then you've got it!

Looping through Characters

So we can't index into a string directly, what can we do?

We can convert the String into something that we can index into directly.

// tokenize.rs

pub fn tokenize(input: String) -> Vec<Token> {

// replace the function body

let chars: Vec<char> = input.chars().collect();

let mut index = 0;

let mut tokens = Vec::new();

while index < chars.len() {

// now we can get the character using: chars[index]

index += 1;

}

tokens

}

The first thing we do is collect() the string into a vector of characters. The Rust char datatype is 4 bytes, and thus can safely represent any code point. The rest of the code is the standard bookkeeping needed to loop in a procedural fashion.

New Concept: mutability

The mut keyword allows a variable to be mutable (it can change). By default, all values in Rust are not mutable.

The variable chars does not need to be mutable, because we are never modifying it. index needs to be mutable because we will increment it as we work through the input, and tokens needs to be mutable because we will push in Tokens as we generate them.

New Concept: usize

Hover over the index variable to see its type - it is type usize.

usize is one of the many integer types in Rust, and it means "unsigned integer that is the size of a pointer".

- unsigned means no negative numbers

- you are likely reading this article on a 64-bit machine, so the size of a pointer is 64 bits and therefore a

usizeis 64 bits. There are other machine architectures with different sizes

usize is typically used for indexes, as in this case accessing elements of a Vec by index

Finally, make a Token

Add two lines into the tokenize function, and create a new function.

// tokenize.rs

pub fn tokenize(input: String) -> Vec<Token> {

// ...snip...

while index < chars.len() {

let token = make_token(chars[index]);

tokens.push(token);

// ...snip...

}

// ...snip...

}

fn make_token(ch: char) -> Token {

match ch {

'[' => Token::LeftBracket,

']' => Token::RightBracket,

'{' => Token::LeftBrace,

'}' => Token::RightBrace,

',' => Token::Comma,

':' => Token::Colon,

_ => todo!("implement other tokens"),

}

}

This matches on the "punctuation" kind of tokens, and the _ matches all other possibilities. Go ahead and run the unit test from before, it should pass! Add more unit tests for the punctuation tokens if you'd like.

If you haven't seen match or a similar "pattern matching" feature in a language before, for this particular example you can think of it like a more concise way of writing this:

if ch == '[' {

Token::LeftBracket

} else if ch == ']' {

Token::RightBracket

} else if ch == '{' {

Token::LeftBrace

} else if ch == '}' {

Token::RightBrace

} else if ch == ',' {

Token::Comma

} else if ch == ':' {

Token::Colon

} else {

todo!("implement other tokens")

}

Pattern matching happens in more places than just match expressions and has more capabilities than just an equality check, more can be found in the Patterns and Matching chapter of the Rust book.

New Concept: todo!()

todo!() is a macro that's built into Rust, in this case, you can think of it like a built-in function. It allows your code to compile so you can run your code without everything being totally finished, which helps with prototyping and testing things out. I often will sketch out my module by defining the data, then defining the functions with input/output types and todo!() for the body. This allows for fast prototyping while also being explicit of what needs to be finished later.

If the code actually reaches the todo!() at runtime, the program panics.

Literal Tokens

Next up are the three literal tokens, which are null, true, and false.

Let's add those to the match block.

fn make_token(ch: char) -> Token {

match ch {

// ...snip...

'n' => todo!("implement `null` token"),

't' => todo!("implement `true` token"),

'f' => todo!("implement `false` token"),

// ...snip...

}

}

...and Houston we have a problem. In our current design, there's no way to move on to the next letter of a literal (u for null, r for true, and a for false). It worked previously because the punctuation tokens have a 1:1 relationship between character and token. This is not true for the rest of the tokens, we need a new design.

There are a few ways to go about this. One way is to create a Finite-State Machine that tracks states such as "Is Tokenizing Null Token" and looks for the appropriate letters. You'd also need to keep track of which letters have been processed so far.

Another approach is to give the make_token function the ability to increment the index on its own, because this function will know when to increment it. This requires changing our function.

The Elephant-sized Crab in the Room: Ownership and Borrowing

We now get to see the most famousest pair of hobbits in the Rust Shire, ownership and borrowing!

The Rust book linked will describe this much better, but essentially:

- Ownership: The owner of the data can decide what to do with it

- Borrowing: Entities that are not the owner can borrow the data

Consider the data we have so far. We have chars and index which are owned by the tokenize function. The make_token function needs this data so that it can crunch through the characters that it needs to create the next token. Let's see how that concept translates to Rust.

// update the signature of make_token

fn make_token(chars: &Vec<char>, index: &mut usize) -> Token {

// This won't compile now, we'll fix that next

// ...snip...

}

This example shows both kinds of borrows in Rust (it's almost like I planned it!).

The &Vec<char> is a shared (immutable) borrow. This means that multiple entities can borrow this data but they can't mutate it. The &mut usize is an exclusive (mutable) borrow. This means that only one entity can borrow this data, but they are allowed to mutate it. Recapping the syntax/symbols:

&: shared (immutable) borrow&mut: exclusive (mutable) borrow

The Golden Rule of Rust borrowing is:

A borrow can be shared, or a borrow can be mutated, but not both.

This concept might be familiar to you already as a "best practice" from other languages - i.e. "don't mutate shared state". If that's the case, you might be able to understand this pretty quickly as its really an encoding of that best practice into the type system of the language. However, if you're like me who wasn't exposed to this kind of thinking before, you may struggle to understand it at first. That's OK! Patient and practice will prevail.

Optional concept: slices

Normally, the Vec wouldn't be borrow with &Vec<char>, it would actually be borrowed as a slice, which is written as &[char]. This is essentially a "view" into the data that is owned by the Vec. It's not necessary to learn this concept for this tutorial, so we'll continue to use the &Vec<char> version even though you wouldn't normally see that in production code.

Feel free to use a slice instead if you're comfortable.

Let's make the code compile again, we can dereference the index using the * operator and get the character at that index.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Token {

let ch = chars[*index];

// ...snip...

}

New Concept: dereferencing

Dereferencing is first discussed in the References and Borrowing section of the Rust book.

When you have a value behind a reference, the unary prefix * operator can be used to get that value. Unary means this operator takes one argument (unlike binary * used for multiplication) and prefix means the operator comes before the argument.

There's more to dereferencing than this, discussed in the Rust book in the Smart Pointers section, but this is not necessary to know for this tutorial.

Let's add a function that looks for the characters "n", "u", "l", "l" to tokenize the literal null.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Token {

let ch = chars[*index];

match ch {

// ...snip...

'n' => tokenize_null(chars, index),

't' => todo!("implement `true` token"),

'f' => todo!("implement `false` token"),

// ...snip...

}

}

fn tokenize_null(chars: &Vec<char>, index: &mut usize) -> Token {

for expected_char in "null".chars() {

if expected_char != chars[*index] {

// uh oh, what do we do here?

}

*index += 1;

}

Token::Null

}

We've come to one of the major concepts in every programming language, which cannot be avoided.

Error Handling

If we try to tokenize the following (not) JSON:

nooooooooooooo

It will first see the "n" and then check if it's the full token null, but in this case it's not! So what should we do?

In Rust, we don't throw an Exception and hope that someone else catches it. There are a few reasons for not doing that in this scenario:

- Remember that we are building a library. Throwing an Exception from a library will affect the control flow of the application that calls our library. This is an intrusive thing for a library to do because it breaks the API boundary between the library and the application.

- Exceptions in many languages affects the "honesty" of the function's return type. For example, in C# or TypeScript, the function might claim that it returns a

Tokenbut it actually only sometimes returns aToken. The core issue is that any function may not return what it says that it returns. Eric Lippert (standardization committee of C# and former C# designer) calls these Vexing Exceptions. - The library should not decide what is exceptional, it is the application that is using the library that decides what is exceptional. It is the application that is aware of the domain it is being used for, whereas the library needs to be general and not make assumptions.

- In this particular case of JSON parsing, bad data is not exceptional at all. Any time that data is ingested from outside the system, you need to assume that the data might be bad. This is not exceptional, thus an Exception should not be used.

So what do we do instead? We will return a data structure with two possibilities - the function succeeded or the function failed.

This is a closed set (succeed or fail, no other variants), so based on what we've learned so far, what type of data is used? If you said enum, that's right! Rust has this kind of data structure built-in to its core library, called Result. This is an enum with two possible variants, named Ok and Err.

/// One of the possible errors that could occur while tokenizing the input

#[derive(Debug, PartialEq, Eq, Clone)]

pub enum TokenizeError {

/// The input appeared to be the start of a literal value but did not finish

UnfinishedLiteralValue,

}

fn tokenize_null(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

for expected_char in "null".chars() {

if expected_char != chars[*index] {

return Err(TokenizeError::UnfinishedLiteralValue);

}

*index += 1;

}

Ok(Token::Null)

}

First, we defined an enum for ourselves to define the possible error variants. We'll come back to this and define more variants as we go. Second, we updated the tokenize_null function to return a Result containing a Token (when Ok) or containing a TokenizeError (when Err).

Now that tokenize_null can fail, so can make_token.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let ch = chars[*index];

let token = match ch {

// ...snip...

'n' => match tokenize_null(chars, index) {

Ok(token) => token,

Err(err) => return Err(err),

},

// ...snip...

};

Ok(token)

}

We changed these parts of make_token:

- The return type to indicate it can fail by returning a

Result - We

matchon the Result of thetokenize_nullfunction, because it can either succeed or fail. If it fails, we go ahead andreturnthat error, otherwise we grab theTokenfrom inside theResult. - At the bottom of the function, we return the

Tokeninside of theOkvariant.

New Concept: error propagation operator (?)

The match expression we just added in the previous step is a bit wordy. You can imagine we would have to do the same thing for any tokenizing that can fail, which will get repetitive and verbose. We can replace match expressions like that one with the ? operator.

This ? operator creates an "Error Propagation Expression". This expression propagates (i.e. "passes up") the error if it's an Err, otherwise it unwraps the Ok value. We can now replace that match expression with the ? operator:

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let ch = chars[*index];

let token = match ch {

// ...snip...

'n' => tokenize_null(chars, index)?,

// ...snip...

};

Ok(token)

}

Similarly, we'll also need to change the tokenize function, because this can also fail.

// tokenize.rs

pub fn tokenize(input: String) -> Result<Vec<Token>, TokenizeError> {

let chars: Vec<char> = input.chars().collect();

let mut index = 0;

let mut tokens = Vec::new();

while index < chars.len() {

let token = make_token(&chars, &mut index)?;

tokens.push(token);

index += 1;

}

Ok(tokens)

}

Notice how changing something from infallible (can't error) to fallible forces you to change all of the function signatures until the point where that error can be handled. Coming from TypeScript or C# where the function signatures pretend that errors don't exist can definitely make this explicit notation feel verbose/wordy, but the explicitness and strictness has benefits for the long-term readability and maintainability of the code.

We can now write the other functions for tokenizing the true and false literal values.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let ch = chars[*index];

let token = match ch {

// ...snip...

'f' => tokenize_false(chars, index)?,

't' => tokenize_true(chars, index)?,

// ...snip...

};

Ok(token)

}

fn tokenize_false(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

for expected_char in "false".chars() {

if expected_char != chars[*index] {

return Err(TokenizeError::UnfinishedLiteralValue);

}

*index += 1;

}

Ok(Token::False)

}

fn tokenize_true(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

for expected_char in "true".chars() {

if expected_char != chars[*index] {

return Err(TokenizeError::UnfinishedLiteralValue);

}

*index += 1;

}

Ok(Token::True)

}

The tokenize_null, tokenize_false, and tokenize_true all look quite similar. The only differences are the literal string being compared and the variant of the Token that is returned.

How would you implement a tokenize_literal function that could handle all of these cases? What are the tradeoffs of doing this?

Let's add some additional tests now, and feel free to add more as appropriate.

// inside of `mod tests`, among the other tests

#[test]

fn just_null() {

let input = String::from("null");

let expected = [Token::Null];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

#[test]

fn just_false() {

let input = String::from("false");

let expected = [Token::False];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

#[test]

fn just_true() {

let input = String::from("true");

let expected = [Token::True];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

Go ahead and run these tests, they should all pass. However, then add this test. What happens?

// inside of `mod tests`, among the other tests

#[test]

fn true_comma() {

let input = String::from("true,");

let expected = [Token::True, Token::Comma];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

Oops! The test failed.

running 1 test

thread 'tokenize::tests::true_comma' panicked at 'assertion failed: `(left == right)`

left: `[True]`,

right: `[True, Comma]`'

So what happened? We need to trace the flow of our program over time and track its state (or use a debugger!).

tokenize has a main loop where it calls make_token, then increments the index by 1. This is necessary to avoid an infinite loop, after tokenizing a character such as , the index needs to be incremented to move on to the next character.

OK, all good there. However, make_token in this case calls tokenize_true, which also increments the index after each letter of "true". This causes the index to be incremented by one extra!

There are 2 hard problems in computer science: cache invalidation, naming things, and off-by-1 errors.

~Phil Karlton, maybe

There are a few ways this can be solved in the tokenize_true function:

- Only increment the index if

expected_char != 'e' - Something fancy like convert the iterator to a Peekable, and peek ahead to determine whether to increment the index or not

- Decrement the index at the end of the function

- Remove the increment in the main loop of

tokenize. Increment the index variable in each of the match arms for the single-byte characters

Recall the previous design question of whether tokenize_null, tokenize_false, and tokenize_true should instead be replaced by a general tokenize_literal function. Would any of these options not work in that case?

Idea #3 is pretty simple to implement, and can be done as shown below:

fn tokenize_true(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

// ...snip...

*index -= 1; // index is incremented in the main loop

Ok(Token::True)

}

The same solution needs to be applied to tokenize_false and tokenize_null (or tokenize_literal, if you chose to generalize these functions!). Afterwards, the tests that we had written will now pass. Feel free to add more tests as it seems appropriate.

Numbers

One of the six kinds of JSON tokens are numbers. In a JSON document, they appear like numbers in almost any other programming language you've used:

1.23

Reminder: the code snippet above is a valid JSON document on its own. JSON documents do not need to start with square brackets or curly braces.

We'll add another case to our main match expression, this time matching characters that are digits.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let ch = chars[*index];

let token = match ch {

// ...snip...

c if c.is_ascii_digit() => tokenize_float(chars, index)?,

// ...snip...

};

Ok(token)

}

New Concept: match guards

The if keyword after the match variable is a match guard. This arm of the match expression is only matched if the match guard is true.

We've introduced a new function, tokenize_float. Time to implement this:

fn tokenize_float(chars: &Vec<char>, curr_idx: &mut usize) -> Result<Token, TokenizeError> {

let mut unparsed_num = String::new();

let mut has_decimal = false;

while *curr_idx < chars.len() {

let ch = chars[*curr_idx];

match ch {

c if c.is_ascii_digit() => unparsed_num.push(c),

c if c == '.' && !has_decimal => {

unparsed_num.push('.');

has_decimal = true;

}

_ => break,

}

*curr_idx += 1;

}

match unparsed_num.parse() {

Ok(f) => Ok(Token::Number(f)),

Err(err) => Err(TokenizeError::ParseNumberError(err)),

}

}

// add this import to the top of the file

use std::num::ParseFloatError;

// add this new variant to the enum

pub enum TokenizeError {

// ...snip...

/// Unable to parse the float

ParseNumberError(ParseFloatError),

}

There's a lot going on here, so we'll break it down.

- First, we set up a couple of mutable local variables

numberandhas_decimal. Since they are marked asmut, we know these will be changed over the course of the function. - The main loop of the function walks through the characters, starting at the current index.

- If the character is a digit, we add it to our string

- If the character is a decimal point and we haven't seen a decimal point yet, we add that to the string and flip the mutable flag variable

- Otherwise, break the loop

- Finally, parse the string to a float, and handle if that parsing fails.

The match expression at the very end of the function is fairly ugly, and repeats Ok/Err in the arms. It was written this way to use the concepts we already learned and avoid adding new concepts.

Look up Result::map and Result::map_err in the Rust standard library documentation and see if you can re-write this match expression to use those instead to clean up the code.

Let's add a few tests for numbers.

// inside of `mod tests`, among the other tests

#[test]

fn integer() {

let input = String::from("123");

let expected = [Token::Number(123.0)];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

#[test]

fn floating_point() {

let input = String::from("1.23");

let expected = [Token::Number(1.23)];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

Does this cover everything?

hint: Nah

Our tests don't cover negative numbers.

// inside of `mod tests`, among the other tests

#[test]

fn negative_integer() {

let input = String::from("-123");

let expected = [Token::Number(-123.0)];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

Does this test pass? If not, how can you fix it?

Are there any other cases for numbers from JSON.org that we missed?

hint: Yeah

What does "E" mean in a number?

how many nested spoilers can we have?

Alright, I'm done now

Strings

Time for the last kind of Token, and we are almost done with tokenizing!

JSON strings look like this:

"a string"

The string starts with a " character and includes all characters until the next " character.

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let ch = chars[*index];

let token = match ch {

// ...snip...

'"' => tokenize_string(chars, index)?,

// ...snip...

};

Ok(token)

}

Here's the implementation:

fn tokenize_string(chars: &Vec<char>, curr_idx: &mut usize) -> Result<Token, TokenizeError> {

let mut string = String::new();

while *curr_idx < chars.len() {

*curr_idx += 1;

let ch = chars[*curr_idx];

if ch == '"' {

break;

}

string.push(ch);

}

Ok(Token::String(string))

}

- Create a mutable string buffer to push the characters into

- Loop through the available characters, adding every character to the buffer until the next

"character - Create a token from the tokenized string and return it

Let's add some tests to confirm our functionality.

// inside of `mod tests`, among the other tests

#[test]

fn just_ken() {

let input = String::from("\"ken\"");

let expected = [Token::String(String::from("ken"))];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

However, we're not covering all of the cases! What should happen if the input looked like this?

"no closing quote

This is not a valid JSON document because there isn't a closing quote character, so we need to return an error rather than a valid Token.

We'll need to rework the main loop of tokenize_string. Instead of breaking the loop when the index reaches the end of the input string, which naturally happened in the while loop, this case needs to be an error instead.

fn tokenize_string(chars: &Vec<char>, curr_idx: &mut usize) -> Result<Token, TokenizeError> {

// ...snip...

// replace the `while` loop with this loop

loop {

*curr_idx += 1;

if *curr_idx >= chars.len() {

return Err(TokenizeError::UnclosedQuotes);

}

let ch = chars[*curr_idx];

if ch == '"' {

break;

}

string.push(ch);

}

}

// add this new variant to the enum

pub enum TokenizeError {

// ...snip...

/// String was never completed

UnclosedQuotes,

}

We also could have instead kept the while loop and introduced a mutable boolean variable to track whether a closing quote had been seen. Either way works, as long as we return an error if the string isn't closed.

Finally, a test for an unclosed quote. Note that tests for errors still follow the same format, because errors are also data. This might be obvious to some readers, but it wasn't obvious to me at first, coming from languages where you have to test with assertions like expect(func()).toThrow().

// inside of `mod tests`, among the other tests

#[test]

fn unclosed_string() {

let input = String::from("\"unclosed");

let expected = Err(TokenizeError::UnclosedQuotes);

let actual = tokenize(input);

assert_eq!(actual, expected);

}

We are missing a pretty important part of string processing, which is "escape characters". For example:

"the cat says \"woof\""

Our tokenizing would currently do two things incorrectly here:

- It would include

\in the tokenized String - It would stop tokenizing when it reaches the

"before the "woof"

All of the possible escape characters, such as \n and \t can be found on JSON.org.

To handle these escape sequences, there are two main design possibilities - the tokenizing could handle it and produce a Token::String that is fully processed, or the tokenizing could understand just enough to not stop tokenizing when it hits a \" and handle the escape sequences later.

For this tutorial, we are going to capture the whole string in the token, without processing escape sequences, and process those when parsing the token. We do need to at least change to not stop tokenizing when it hits a \".

Just for fun, we can start implementing this by adding a test that will fail.

// inside of `mod tests`, among the other tests

#[test]

fn escaped_quote() {

let input = String::from(r#""the \" is OK""#);

let expected = [Token::String(String::from(r#"the \" is OK"#))];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

Repetitive code got you down?

There's going to be a bunch of Token::String(String::from("...")) throughout the rest of the tutorial. I don't particularly mind it, but I also think it's valid to want to reduce this. At least as an opportunity for an optional new concept 😃

New Concept: impl blocks

Functions can be associated to types by using an impl block.

// can put this below the `enum Token` definition

#[cfg(test)]

impl Token {

fn string(input: &str) -> Self {

Self::String(String::from(input))

}

}

This creates an associated function, i.e. a function associated with a type. As with before, the #[cfg(test)] makes this function only included when running tests. Note that the variant name is String and the function is string (case-sensitive).

Self is a "variable" that corresponds to the type of the impl block, so in this case, Self means Token.

This function can be called with Token::string("..."), so for example, we can change our existing test to:

// inside of `mod tests`, among the other tests

#[test]

fn just_ken() {

let input = String::from("\"ken\"");

let expected = [Token::string("ken")];

let actual = tokenize(input).unwrap();

assert_eq!(actual, expected);

}

A bit more concise, not a huge deal one way or the other, but a good opportunity for showing how the impl block for associated functions. The impl block can do more than this, read up about it if you're interested!

New Concept: raw string literal

The r# syntax at the front of the string and the # at the back in the previous example delimits a Raw string literal. These are nice because you don't have to escape quotes and backslashes for the Rust string.

Testing and keeping all this escaping straight is challenging, because you have to keep track of three layers: Rust string -> JSON document -> JSON String Token

At some point, a library author might seriously consider storing the input data for tests in separate *.json files to at least reduce the complexity of encoding the input data as a Rust string.

This test will fail, so let's implement the feature to make it pass. We're going to introduce a boolean variable to track whether we are in "escape mode" or not. The logic will be:

- If the character is a

"and the previous character was not a backslash, tokenizing for this string is done so break out of the loop - If the character is a

\, flip the "escape mode" boolean variable- This needs to flip the variable, not just set it to

true, because the second backslash of a\\sequence need to turn off the "escape mode"

- This needs to flip the variable, not just set it to

- Any character besides a

\turns off "escape mode"

There are three possibilities, so three arms in the match:

fn tokenize_string(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let mut string = String::new();

let mut is_escaping = false;

loop {

*index += 1;

if *index >= chars.len() {

return Err(TokenizeError::UnclosedQuotes);

}

let ch = chars[*index];

match ch {

'"' if !is_escaping => break,

'\\' => is_escaping = !is_escaping,

_ => is_escaping = false,

}

string.push(ch);

}

Ok(Token::String(string))

}

Feel free to use a different variable name for is_escaping, I'm not completely satisfied with that name.

New Concept: match guards

The if inside of the match arm is an example of a match guard, this is often a nice way to have the number of match arms exactly "match" (hehe) the number of possibilities in the logic.

The expression in the match guard can be arbitrarily complex, but it's best to not go too crazy in here for readability/maintainability.

Empty Space

We still have a lingering todo!() in the main match expression of make_token, and we haven't handled the very common scenario that a JSON document has empty space in it (space character, tab, newline, etc.).

fn make_token(chars: &Vec<char>, index: &mut usize) -> Result<Token, TokenizeError> {

let mut ch = chars[*index];

while ch.is_ascii_whitespace() {

*index += 1;

if *index >= chars.len() {

return Err(TokenizeError::UnexpectedEof);

}

ch = chars[*index];

}

let token = match ch {

// ...snip...

ch => return Err(TokenizeError::CharNotRecognized(ch)),

};

Ok(token)

}

// add these new variants to the enum

pub enum TokenizeError {

// ...snip...

/// The input ended early

UnexpectedEof,

/// Character is not part of a JSON token

CharNotRecognized(char),

}

Conclusion

We have now completed an implementation of tokenizing for JSON tokens, ideally as a practical example to teach Rust syntax, semantics, and language features.

Throughout this tutorial, you have learned __ new concepts! (plus or minus a few). That's really good! One thing that's neat about Rust is that there are a few broad themes that connect everything (ownership, borrowing) but besides that each feature is generally self-contained and can be taught on its own (if you want to sound fancy, you'd say that the features are mostly orthogonal to each other).

The next and last article in this series will contain much more code than this article, but fewer new concepts because most were learned already.